At Cyral, Kubernetes is one of our many supported deployment targets, and we use Helm to deploy our sidecars on it. To ensure high availability, we usually run multiple replicas of our sidecar as a ReplicaSet and distribute traffic across the replicas with a load balancer. As a deliberate design choice, we do not mandate a particular load balancer; that choice is left to the user.

Classic Load Balancing

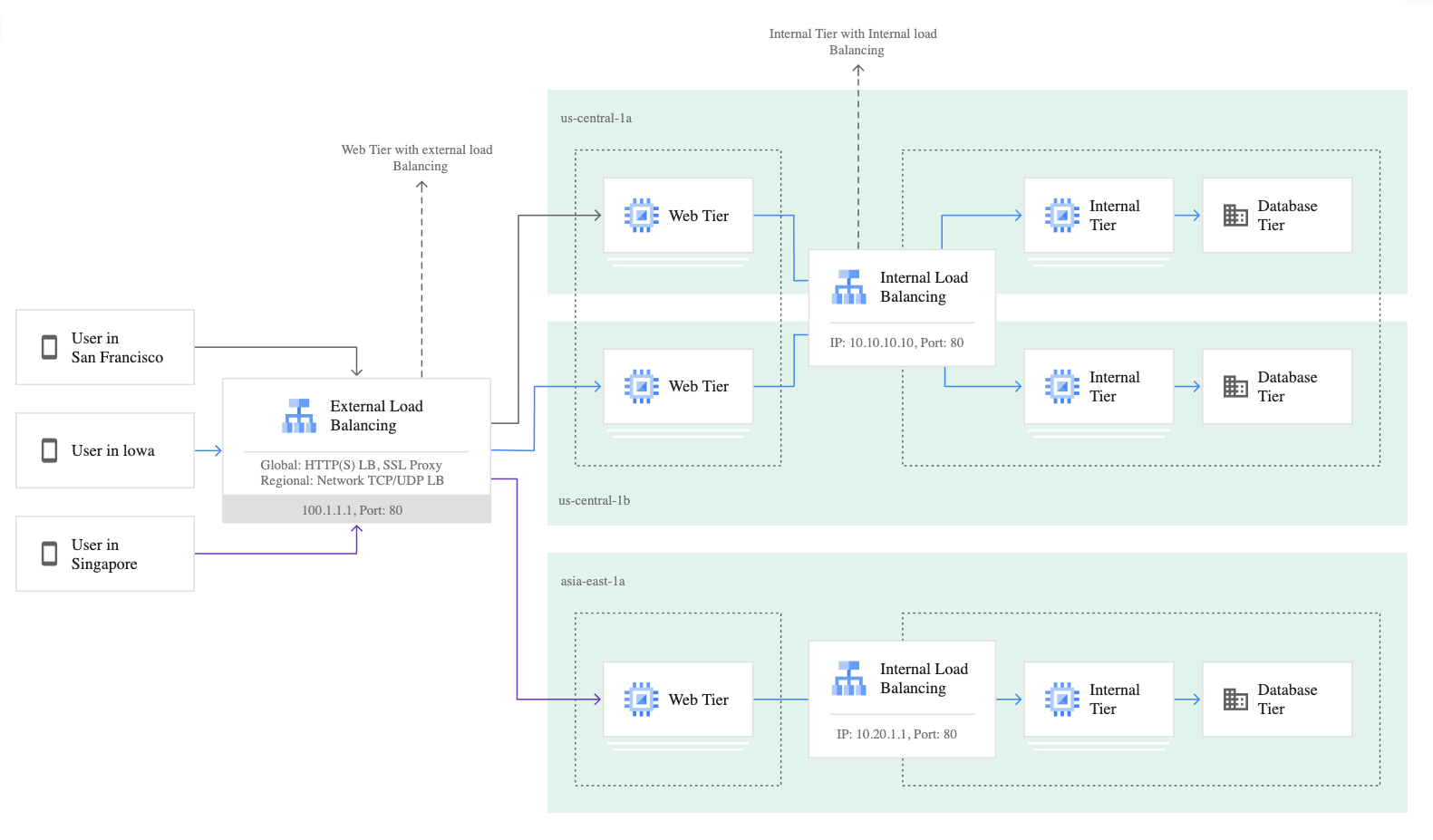

In a distributed system, load balancers can be placed wherever traffic distribution is needed. An n-tiered stack might end up with n load balancers.

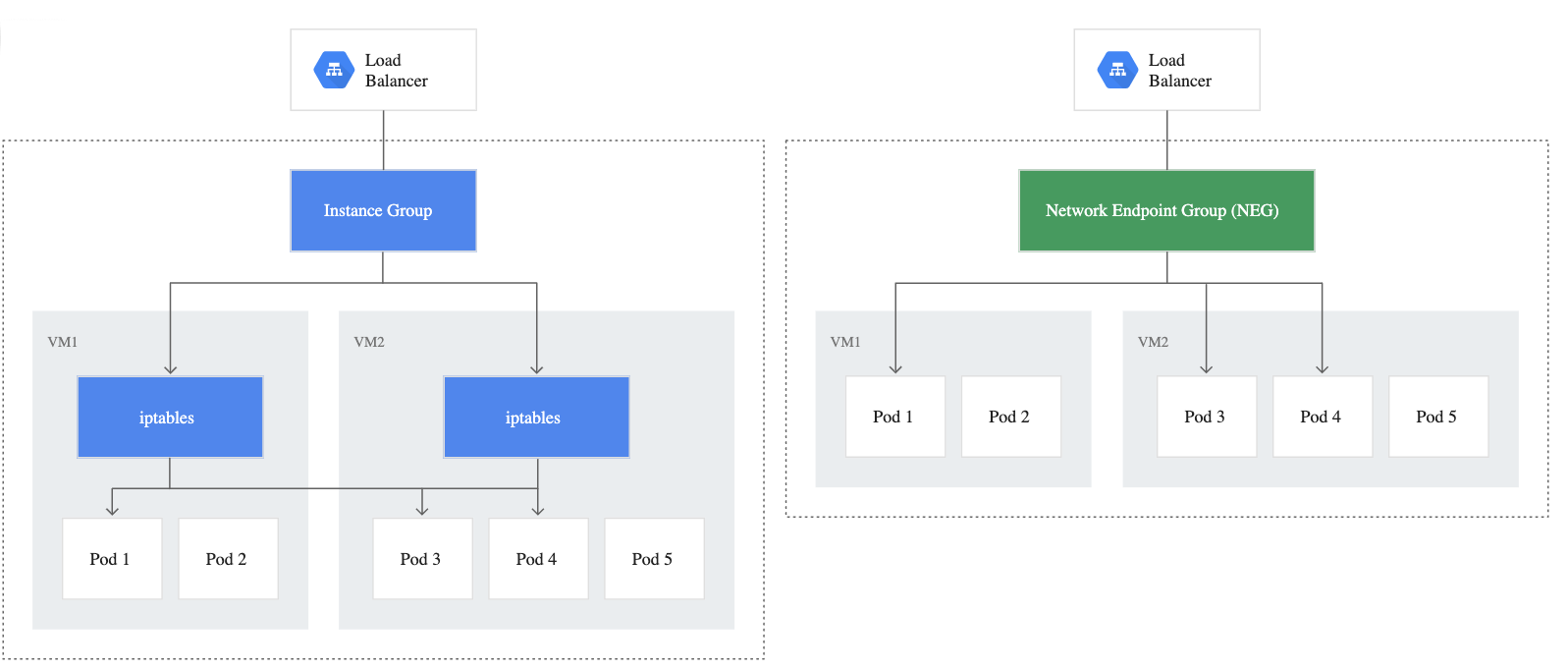

Using load balancers as illustrated above has proven benefits, and this architecture is a popular choice for modern distributed systems. Traditionally, load balancing assumed machines as its targets: traffic originating from outside would be distributed among a dynamic pool of machines. However, container orchestration tools like Kubernetes do not provide a one-to-one mapping between machines and pods. There may be more than one pod on a machine, or the pod that is available to serve traffic may reside on a different machine. Standard load balancers still route traffic to machine instances, where iptables rules then route it to individual pods. This introduces at least one additional network hop, adding latency to the packet's journey from the load balancer to the pod.

Routing Traffic Directly to Pods

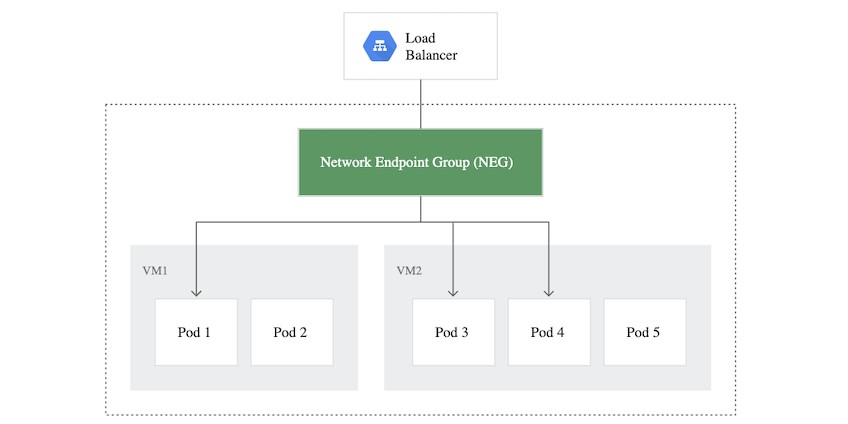

Google introduced container-native load balancing at its Next '18 event and made it generally available earlier this year. The key concept is a new data model called a Network Endpoint Group (NEG), which can use different kinds of targets instead of routing traffic only to machines. One possible target is the pod handling traffic for the service. So, instead of routing to the machine and then relying on iptables to reach the pod as illustrated above, with NEGs the traffic goes straight to the pod. This decreases latency and increases throughput compared with traffic routed through vanilla load balancers.
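The difference between the two routing paths can be modeled in a few lines of Python. This is a simplified sketch, not a measurement: the `Pod` class, the node and pod names, and the hop counting are all illustrative assumptions, and a real packet's path also depends on the cluster's network setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    name: str
    node: str  # machine the pod is scheduled on (hypothetical names)


def path_via_node(entry_node, pod):
    """Classic routing: the load balancer targets a machine, and iptables
    on that machine forwards to a pod that may live on another machine."""
    path = ["load-balancer", entry_node]
    if pod.node != entry_node:
        path.append(pod.node)  # extra hop to the machine hosting the pod
    path.append(pod.name)
    return path


def path_via_neg(pod):
    """NEG routing: the pod itself is the load balancer's target."""
    return ["load-balancer", pod.name]


def hops(path):
    """Number of hops is the number of edges along the path."""
    return len(path) - 1


if __name__ == "__main__":
    remote_pod = Pod(name="sidecar-1", node="node-b")
    print(path_via_node("node-a", remote_pod), hops(path_via_node("node-a", remote_pod)))
    print(path_via_neg(remote_pod), hops(path_via_neg(remote_pod)))
```

In this model the node-based path costs two or three hops depending on where the pod lives, while the NEG path is always one.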

To reduce the hops to a minimum, we utilized Google Cloud Platform’s (GCP) internal load balancer and configured it with NEGs to route database traffic directly to our pods servicing the traffic. In our tests, the above combination led to a significant gain in performance of our sidecar for both encrypted and unencrypted traffic.

Real World Example of NEG

As mentioned above, we use Helm to deploy on Kubernetes. We use annotations, passed to Helm via values files, to configure our charts for cloud-specific deployments. For this post, however, we'll use plain Kubernetes configuration files, since they provide a clearer view of the Kubernetes concepts. The following configuration is an example of running an nginx deployment with 5 replicas.

    # Service
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-internal-example
      annotations:
        cloud.google.com/load-balancer-type: "Internal"
        cloud.google.com/neg: '{
          "exposed_ports":{
            "80":{}
          }
        }'
    spec:
      type: LoadBalancer
      ports:
      - port: 80
        targetPort: 80
        protocol: TCP
        name: http-port
      selector:
        run: neg-routing-enabled
    ---
    # Deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          run: neg-routing-enabled
      replicas: 5 # tells deployment to run 5 pods matching the template
      template:
        metadata:
          labels:
            run: neg-routing-enabled
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80

As shown above, port 80 is mapped to a NEG, and the corresponding NEG endpoints are created.

In the example above, the annotation

    cloud.google.com/load-balancer-type: "Internal"

configures the load balancer to be an internal load balancer on GCP. The mapping of ports to Network Endpoint Groups is done with the following annotation:

    cloud.google.com/neg: '{
      "exposed_ports":{
        "80":{}
      }
    }'

Notes on running the example on GCP:

Running an NEG deployment as an internal load balancer on GCP requires explicit firewall rules for health and reachability checks. The process is described in the GCP documentation.

Here is the YAML for the running service, deployed as an internal load balancer. To retrieve it with kubectl, run:

    kubectl get service nginx-internal-example -o yaml

Output:

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        cloud.google.com/load-balancer-type: Internal
        cloud.google.com/neg: '{ "exposed_ports":{ "80":{} } }'
        cloud.google.com/neg-status: '{"network_endpoint_groups":{"80":"k8s1-53567703-default-nginx-internal-example-80-8869d138"},"zones":["us-central1-c"]}'
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{"cloud.google.com/load-balancer-type":"Internal","cloud.google.com/neg":"{ \"exposed_ports\":{ \"80\":{} } }"},"name":"nginx-internal-example","namespace":"default"},"spec":{"ports":[{"name":"http-port","port":80,"protocol":"TCP","targetPort":80}],"selector":{"run":"neg-routing-enabled"},"type":"LoadBalancer"}}
      creationTimestamp: "2020-08-05T22:52:31Z"
      finalizers:
      - service.kubernetes.io/load-balancer-cleanup
      name: nginx-internal-example
      namespace: default
      resourceVersion: "388391"
      selfLink: /api/v1/namespaces/default/services/nginx-internal-example
      uid: d6e52c50-bf46-4dc3-99a0-7746065b8e6f
    spec:
      clusterIP: 10.0.11.168
      externalTrafficPolicy: Cluster
      ports:
      - name: http-port
        nodePort: 32431
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        run: neg-routing-enabled
      sessionAffinity: None
      type: LoadBalancer
    status:
      loadBalancer:
        ingress:
        - ip: 10.128.0.6

Challenges and Limitations

NEG is a relatively new concept, so the tooling around it is still evolving. We had to work around some quirks to get NEG working correctly for Helm deployments. For example, if your container has multiple ports and you'd like the user to configure them at install time by providing a list of ports, getting the NEG annotation right can be tricky: its value is JSON, so every port entry except the last must be followed by a comma. We use Helm's built-in `first` and `rest` functions to split the list of ports so that the output matches the expected format. The following is an example from our Helm charts:

    annotations:
      cloud.google.com/load-balancer-type: "Internal"
      cloud.google.com/neg: '{
        "exposed_ports":{
          {{- $tail := rest $.Values.serviceSidecarData.dataPorts -}}
          {{- range $tail }}
            "{{ . }}":{},
          {{- end }}
            "{{ first $.Values.serviceSidecarData.dataPorts }}":{}
        }
      }'

Another challenge we faced was the five-port limit of Google's internal load balancer, which restricts our ability to handle traffic from multiple databases with a single sidecar deployment when an internal load balancer is used. Zonal NEGs are not available as a backend for Internal TCP load balancers or external TCP/UDP network load balancers on GCP.
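To make the comma handling and the five-port limit concrete, here is a minimal Python sketch of the same logic. It is an illustration only and not part of our charts: the function name `neg_annotation` and the constant `MAX_INTERNAL_LB_PORTS` are hypothetical, and the sketch assumes the annotation value only needs the `exposed_ports` key shown above.

```python
import json

# Port limit of GCP's internal load balancer, as described above.
MAX_INTERNAL_LB_PORTS = 5


def neg_annotation(ports):
    """Build the JSON value for the cloud.google.com/neg annotation.

    Mirrors the template's ordering: the rest of the list is emitted
    first and the first port last, so no entry ends with a dangling comma.
    """
    if not ports:
        raise ValueError("at least one port is required")
    if len(ports) > MAX_INTERNAL_LB_PORTS:
        raise ValueError(
            f"{len(ports)} ports exceed the limit of {MAX_INTERNAL_LB_PORTS}"
        )
    ordered = list(ports[1:]) + [ports[0]]  # rest first, then first
    return json.dumps({"exposed_ports": {str(p): {} for p in ordered}})


if __name__ == "__main__":
    print(neg_annotation([3306, 5432]))
```

Because `json.dumps` handles separators itself, the ordering trick is not strictly needed in Python; it is kept here only to show what the template's `rest` and `first` calls produce.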

Further Reading on Network Endpoint Groups

- Google’s Medium post on NEG

- NEGs support multiple backend types, including Internet, Zonal, and Serverless groups. Detailed documentation is available in the GCP documentation

- A comparison of NEG performance when used with HTTP traffic by Cloud Ace